Data lakehouse Onehouse nabs $35M to capitalize on GenAI revolution

You can barely go an hour these days without reading about generative AI. While we are still in the embryonic phase of what some have dubbed the "steam engine" of the fourth industrial revolution, there's little doubt that "GenAI" is shaping up to transform just about every industry — from finance and healthcare to law and beyond.

Cool user-facing applications might attract most of the fanfare, but the companies powering this revolution are currently benefiting the most. Just this month, chipmaker Nvidia briefly became the world's most valuable company, a $3.3 trillion juggernaut driven substantially by the demand for AI computing power.

But in addition to GPUs (graphics processing units), businesses also need infrastructure to manage the flow of data — for storing, processing, training, analyzing and, ultimately, unlocking the full potential of AI.

One company looking to capitalize on this is Onehouse, a three-year-old Californian startup founded by Vinoth Chandar, who created the open source Apache Hudi project while serving as a data architect at Uber. Hudi brings the benefits of data warehouses to data lakes, creating what has become known as a "data lakehouse." This enables features such as indexing and real-time queries on large datasets, whether the data is structured, unstructured or semi-structured.

For example, an e-commerce company that continuously collects customer data spanning orders, feedback and related digital interactions will need a system to ingest all that data and keep it up-to-date, which in turn might help it recommend products based on a user's activity. Hudi lets data be ingested from various sources with minimal latency, and it supports deletes as well as "upserts" (updating a record if it already exists, or inserting it if it doesn't), which is vital for such real-time use cases.
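To make the upsert and delete semantics concrete, here is a minimal in-memory sketch. It is not Hudi's API: the `KeyedTable` class and the `order_id` and `ts` field names are hypothetical, and the timestamp check loosely mirrors the idea of using an ordering field so that a late-arriving older version of a record doesn't overwrite a newer one.

```python
from dataclasses import dataclass, field
from typing import Any, Iterable

Record = dict[str, Any]


@dataclass
class KeyedTable:
    """In-memory toy table keyed on a record key (hypothetical, not Hudi's API)."""
    key: str = "order_id"   # hypothetical record-key field
    ordering: str = "ts"    # hypothetical field used to pick the latest version
    rows: dict[Any, Record] = field(default_factory=dict)

    def upsert(self, records: Iterable[Record]) -> None:
        """Insert records with new keys; replace existing ones only with newer versions."""
        for rec in records:
            current = self.rows.get(rec[self.key])
            # An older version arriving late must not overwrite a newer one.
            if current is None or rec[self.ordering] >= current[self.ordering]:
                self.rows[rec[self.key]] = dict(rec)

    def delete(self, keys: Iterable[Any]) -> None:
        """Remove records by key; keys that don't exist are ignored."""
        for k in keys:
            self.rows.pop(k, None)


table = KeyedTable()
table.upsert([{"order_id": 1, "status": "placed", "ts": 1},
              {"order_id": 2, "status": "placed", "ts": 1}])
table.upsert([{"order_id": 1, "status": "shipped", "ts": 2},   # update
              {"order_id": 3, "status": "placed", "ts": 2}])   # insert
table.upsert([{"order_id": 1, "status": "placed", "ts": 1}])   # stale, ignored
table.delete([2])
print(sorted(table.rows))        # [1, 3]
print(table.rows[1]["status"])   # shipped
```

A real lakehouse applies the same logic to files in object storage, which is where indexing matters: finding which file holds an existing key is the expensive part of an upsert at scale.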

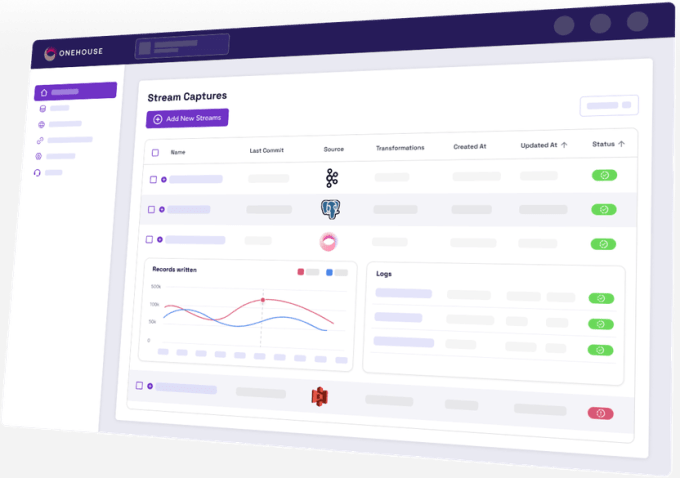

Onehouse builds on this with a fully managed data lakehouse that helps companies deploy Hudi. Or, as Chandar puts it, it "jumpstarts ingestion and data standardization into open data formats" that can be used with nearly all the major tools in the data science, AI and machine learning ecosystems.

"Onehouse abstracts away low-level data infrastructure build-out, helping AI companies focus on their models," Chandar told TechCrunch.

Today, Onehouse announced it has raised $35 million in a Series B round of funding as it brings two new products to market to improve Hudi's performance and reduce cloud storage and processing costs.

Down at the (data) lakehouse

Chandar created Hudi as an internal project within Uber back in 2016, and since the ride-hailing company donated the project to the Apache Software Foundation in 2019, Hudi has been adopted by the likes of Amazon, Disney and Walmart.

Chandar left Uber in 2019, and, after a brief stint at Confluent, founded Onehouse. The startup emerged out of stealth in 2022 with $8 million in seed funding, and followed that shortly after with a $25 million Series A round. Both rounds were co-led by Greylock Partners and Addition.

These VC firms have joined forces again for the Series B follow-up, though this time, David Sacks' Craft Ventures is leading the round.

"The data lakehouse is quickly becoming the standard architecture for organizations that want to centralize their data to power new services like real-time analytics, predictive ML and GenAI," Craft Ventures partner Michael Robinson said in a statement.

For context, data warehouses and data lakes are similar in the way they serve as a central repository for pooling data. But they do so in different ways: A data warehouse is ideal for processing and querying historical, structured data, whereas data lakes have emerged as a more flexible alternative for storing vast amounts of raw data in its original format, with support for multiple types of data and high-performance querying.

This makes data lakes well suited to AI and machine learning workloads: it's cheaper to store raw data before it has been transformed, and because the data is kept in its original form, it can support more complex queries.

However, the trade-off is a whole new set of data management complexities, which can degrade data quality given the vast array of data types and formats. This is partly what Hudi sets out to solve by bringing some key features of data warehouses to data lakes, such as ACID transactions to support data integrity and reliability, as well as improved metadata management for more diverse datasets.
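The "A" in ACID (atomicity) means a write lands completely or not at all, so readers never see a half-finished update. The toy below sketches that idea under simplifying assumptions: a single writer, an in-memory dict standing in for table files, and a hypothetical `fail_after` parameter to simulate a crash. It is only loosely analogous to Hudi, which tracks writes on a timeline and treats data as visible once a commit is marked complete; it is not Hudi's API.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class SnapshotTable:
    """Toy table with all-or-nothing commits (hypothetical, not Hudi's API)."""
    committed: dict[str, Any] = field(default_factory=dict)  # what readers see
    commits: list[str] = field(default_factory=list)         # completed commit ids

    def read(self) -> dict[str, Any]:
        # Readers get a copy of the last committed snapshot, never staged data.
        return dict(self.committed)

    def commit(self, commit_id: str, changes: dict[str, Any],
               fail_after: Optional[int] = None) -> bool:
        staged = dict(self.committed)  # the writer works on a private copy
        for i, (key, value) in enumerate(changes.items()):
            if fail_after is not None and i == fail_after:
                return False  # simulated crash: the staged copy is simply discarded
            staged[key] = value
        # Publish all changes at once by swapping in the new snapshot,
        # then record the commit (single writer assumed; no concurrency control).
        self.committed = staged
        self.commits.append(commit_id)
        return True


table = SnapshotTable()
ok1 = table.commit("c1", {"a": 1, "b": 2})
ok2 = table.commit("c2", {"a": 10, "c": 3}, fail_after=1)  # fails midway
print(ok1, ok2)        # True False
print(table.read())    # {'a': 1, 'b': 2}
print(table.commits)   # ['c1']
```

The failed commit had already staged `a = 10`, yet readers still see `a = 1`, because nothing becomes visible until the whole write is published.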

Because it is an open source project, any company can deploy Hudi. A quick peek at the logos on Onehouse's website reveals some impressive users: AWS, Google, Tencent, Disney, Walmart, ByteDance, Uber and Huawei, to name a handful. But the fact that Hudi's best-known users are such big-name companies hints at the effort and resources required to build an in-house data lakehouse around it.

"While Hudi provides rich functionality to ingest, manage and transform data, companies still have to integrate about half-a-dozen open source tools to achieve their goals of a production-quality data lakehouse," Chandar said.

This is why Onehouse offers a fully managed, cloud-native platform that ingests, transforms and optimizes the data in a fraction of the time a do-it-yourself setup would take.

"Users can get an open data lakehouse up-and-running in under an hour, with broad interoperability with all major cloud-native services, warehouses and data lake engines," Chandar said.

The company was coy about naming its commercial customers, aside from a couple featured in case studies, such as Indian unicorn Apna.

"As a young company, we don’t share the entire list of commercial customers of Onehouse publicly at this time," Chandar said.

With a fresh $35 million in the bank, Onehouse is now expanding its platform with a free tool called Onehouse LakeView, which provides observability into lakehouse functionality for insights on table stats, trends, file sizes, timeline history and more. This builds on existing observability metrics provided by the core Hudi project, giving extra context on workloads.

"Without LakeView, users need to spend a lot of time interpreting metrics and deeply understand the entire stack to root-cause performance issues or inefficiencies in the pipeline configuration," Chandar said. "LakeView automates this and provides email alerts on good or bad trends, flagging data management needs to improve query performance."

Onehouse is also debuting a new product called Table Optimizer, a managed cloud service that optimizes existing tables to expedite data ingestion and transformation.

'Open and interoperable'

There's no ignoring the myriad other big-name players in the space. The likes of Databricks and Snowflake are increasingly embracing the lakehouse paradigm: Earlier this month, Databricks reportedly doled out $1 billion to acquire a company called Tabular, with a view toward creating a common lakehouse standard.

Onehouse has entered a hot space for sure, but it's hoping that its focus on an "open and interoperable" system that makes it easier to avoid vendor lock-in will help it stand the test of time. It is essentially promising the ability to make a single copy of data universally accessible from just about anywhere, including Databricks, Snowflake, Cloudera and AWS native services, without having to build separate data silos on each.

As with Nvidia in the GPU realm, there's no ignoring the opportunities that await any company in the data management space. Data is the cornerstone of AI development, and not having enough good quality data is a major reason why many AI projects fail. But even when the data is there in bucketloads, companies still need the infrastructure to ingest, transform and standardize it before it becomes useful. That bodes well for Onehouse and its ilk.

"From a data management and processing side, I believe that quality data delivered by a solid data infrastructure foundation is going to play a crucial role in getting these AI projects into real-world production use cases — to avoid garbage-in/garbage-out data problems," Chandar said. "We are beginning to see such demand in data lakehouse users, as they struggle to scale data processing and query needs for building these newer AI applications on enterprise scale data."

Yahoo Finance