Apple needs to make Siri fit into a ChatGPT world—Is it Mission Impossible?

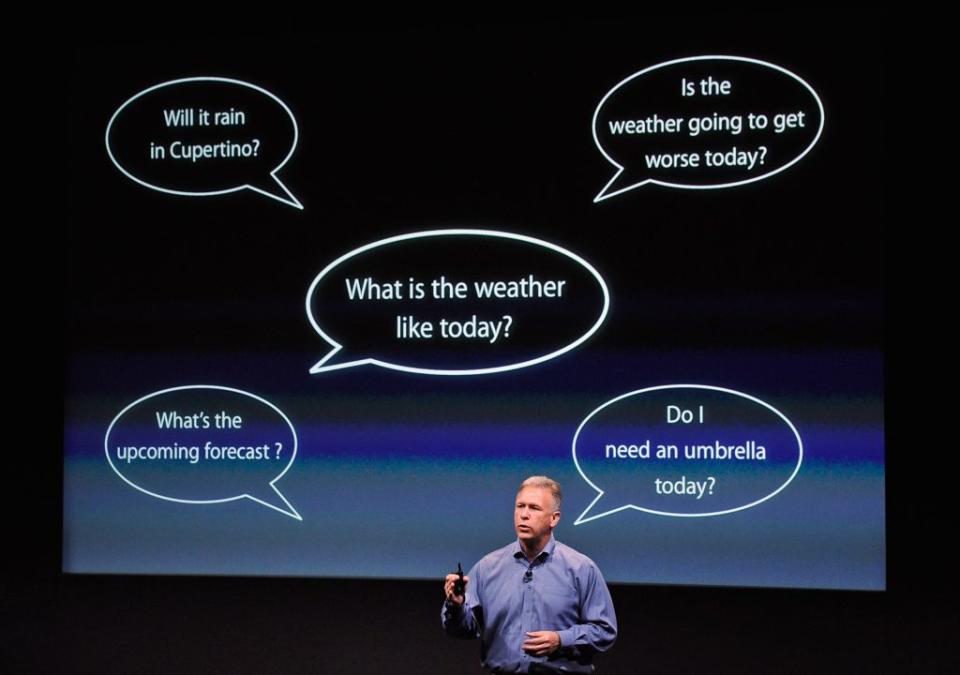

Apple’s Siri was the first virtual assistant to grace our smartphones, but it only took about five years before newer competitors like Google’s Assistant, which could more freely search the web for answers, made it seem a little clunky. Now, nearly 13 years after its 2011 launch, Siri is looking positively antiquated.

The advent of generative AI is to blame. Siri was always an AI assistant of sorts—machine learning, which is a subset of AI, is what gives it the ability to understand spoken commands and requests—but the world has come to expect so much more of AI, particularly since OpenAI's seminal release of the ChatGPT chatbot in late 2022.

Apple arch-rival Google, which has one of the leading large language models in the form of Gemini, is baking a host of AI capabilities into its Android operating system, most notably the Gemini chatbot itself, which is taking over from Assistant. And even on Apple's iOS, many people are now turning to apps for third-party chatbots like ChatGPT. Siri is increasingly out in the cold.

That may change on Monday, when Apple holds what’s likely to be its most consequential Worldwide Developers Conference (WWDC) in many years. Investors are clamoring for the company to declare its enthusiasm for AI more forcefully and, when it unveils iOS 18 next week, Apple will have to demonstrate that it has what it takes to at least keep up in the chatbot stakes. The obvious way to do so will be to unveil a smarter Siri, one that still acts as the familiar voice interface that iPhone owners have long used to access the device's functionality, but that could also understand and do much, much more.

But there’s a problem: Apple’s most advanced under-development large language model (LLM), reportedly known internally as Ajax, isn’t yet ready for primetime.

So, to stay in the game, Tim Cook’s company has reportedly struck a deal with Sam Altman’s OpenAI to integrate ChatGPT into iOS. Bloomberg reports that Apple is still talking to Google about using Gemini too—it may be that iPhone users end up with a panoply of chatbots powering the answers to their queries—but for now, it seems Cook will on Monday tout a mix of ChatGPT and Apple’s in-house AI efforts.

“Apple is playing catch-up with LLM technology, and it’s probable that their AI’s conversational abilities and, more importantly, cost, don’t yet meet what they can get from OpenAI,” said Forrester senior analyst Andrew Cornwall.

The limits of keeping AI 'on-device'

With the OpenAI deal now apparently a certainty, the biggest mystery about Apple’s near-term AI strategy is where it will draw the line between the capabilities offered by the cloud-based ChatGPT and those that it can offer locally on the iPhone or iPad itself. Reports suggest that an algorithm will determine which type of AI would be most appropriate, depending on the sophistication of the request.

Siri’s on-device nature is part of Apple’s big privacy pitch—that, unlike Google in particular, Apple is not interested in exploiting its users’ data. Indeed, this reluctance to learn too much about the user’s interests, and to keep what is learned away from third parties, is widely seen as a key reason why Siri’s capabilities remain so limited.

It is certainly possible to find some kind of middle ground. Apple’s mobile devices have since 2017 included a neural processing unit (NPU)—a specialized component for handling AI tasks—that the company calls the Neural Engine. This has allowed Apple to offer things like improved image and sound recognition, language translation and various health features. The newest iterations of the Neural Engine, reportedly the version in last year’s iPhone 15 Pro as well as whatever is inside this year’s iPhones, will be what enables Apple’s on-device generative AI.

But a tiny NPU will not be able to match the wild horsepower contained in the data centers powering OpenAI, which allows ChatGPT to provide such an uncanny simulacrum of human intelligence. So Apple will have to walk a tightrope between the privacy that its users have come to expect and the futuristic capabilities promised by a cloud-happy AI sector.

“Apple is likely to announce that much of the new Siri processing is done on-device,” said Cornwall, who suggested that the reported Apple-OpenAI deal “would be a stopgap measure to give Apple developers time to build an LLM that can run mostly on-device and inside Apple’s ecosystem.” As for requests that require OpenAI’s superior technology, Apple will probably route those through its own servers so any personal data can be anonymized, he added—a tactic that wouldn’t just conform to Apple’s privacy-first brand, but that would also “permit Apple to switch providers without disrupting its users.”

According to Bloomberg, the revamped Siri that Apple will unveil on Monday will be able to control the functions within apps on the user’s behalf, open documents, email web links, and summarize online articles. But even if some functions are using OpenAI’s technology, Apple will likely present them as improved “Apple capabilities” rather than “a front end to OpenAI,” Cornwall said.

Company pride aside, there are good reasons why Apple may want to avoid putting its OpenAI association front and center. Altman has become a highly controversial figure. He allegedly kept OpenAI’s old board in the dark about the company’s plans so that its members would not delay moves like the release of ChatGPT in the name of safety, and his lack of openness with his overseers led to his brief ouster late last year. Although he won that tussle, former OpenAI employees continue to warn about an insufficient focus on safety.

Even if Apple does keep OpenAI at arm’s length, it also faces risks around ChatGPT’s propensity to confidently make up nonsense when answering questions—a problem called “hallucinating” that is shared to some extent by all LLMs, as demonstrated by the dangerously wrong answers that Google’s recently AI-imbued Search has been giving. Users may not welcome Siri’s newfound willingness to provide answers if those answers are wrong.

As for whether Monday’s reveal will be enough to convince Apple’s users and Wall Street that the iPhone remains both a cutting-edge and reliable device, the proof will be in the hands-on experiences that follow when iOS 18 becomes available for public testing in July, ahead of its full release around September.

This story was originally featured on Fortune.com

Yahoo Finance
