'The digital identity industry has forgotten about humans'

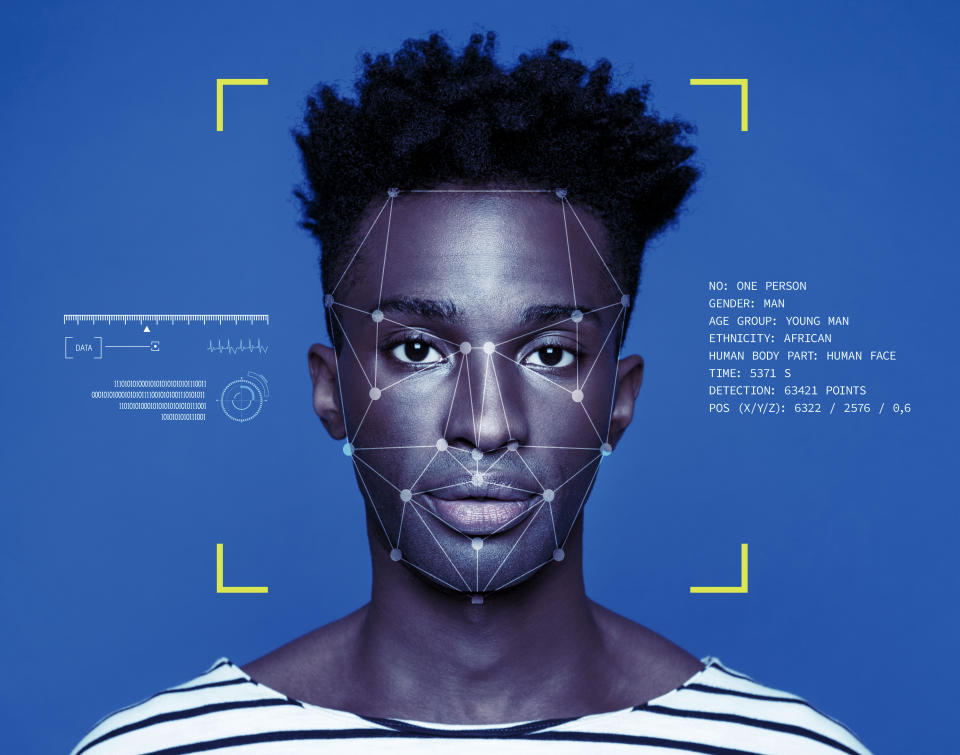

From facial recognition to biometric authentication, the digital identity industry develops the products that now serve as the gatekeepers in our everyday lives. But what happens when the algorithms that control access are biased?

“We’ve gone a little bit up our own backside with technology. And I think as an industry, we’ve forgotten about humans,” said Emma Lindley, the co-founder of Women in Identity, a startup that encourages companies working on identity products to have more diverse workforces.

Lindley observed that most biased algorithms arise when those making the systems don’t even think to ask the obvious questions — the ones that would ensure that identity products cater for everybody equally.

The people building these systems might all be white men, or they might all come from the same socioeconomic background, she said.

And that, Lindley warned, is how the Home Office ends up deploying facial recognition technology that fails to recognise people with very dark or very light skin.

For a government department, employing badly made identity products might create a national firestorm. But for fintech companies, Lindley noted, the customer will just move on.

“If you create a crap customer journey because the supplier that you've picked hasn't been thinking about the inherent bias that might be in their product, then your customers are just going to go somewhere else.”


Lindley therefore advised companies to ensure that they choose their suppliers carefully. “Don't assume that your supplier of identity products is thinking about making them work for everybody,” she said.

Lindley was speaking alongside Gail Kozlowski, the credit card lead at RBS, at a round-table discussion about security and identity at FinTECH Talents, a London-based fintech festival.

Asked about complaints that the algorithms used to set spending limits on Apple’s new credit card might be inherently biased against women, Kozlowski said that using automation and machine learning to make decisions was “a great way of stripping out cost.”

But she said there should be someone to check if the algorithm was doing its job correctly.

“There should always be an element of human interaction to make sure that we are learning from the decisions that we have made and making sure that we're feeding it the right data,” she said.

Yahoo Finance UK is proud to be a media partner for FinTECH Talents, the ultimate global fintech festival, held in London on 11-13 November 2019. The festival brings together 3,000 festival-goers and 1,500 innovators representing over 400 different financial services institutions, along with rockstar speakers and steering committee members, over 50 hours of content sessions, game-changing tech companies, the talent of tomorrow, and craft beer and live music sessions.

Yahoo Finance
